Seth Redmore

Just over two-thirds of Americans managed to read a book last year. But that’s okay: more and more, machines are doing our reading for us.

From news articles to press releases, machines are being used to evaluate what’s happening in the world. But they also have a very particular, blinkered way of interpreting results.

In some instances, that’s a good thing. It helps to have a disinterested party weigh in on political debates or sports, for example. In others, it’s less of an asset. Humor, sarcasm and satire pose challenges for machines, making them terrible at picking out whether a news article is from the New York Times or The Onion.

When it comes to nuance, machines are dense. This problem is being addressed by AI vendors that specialize in text analytics, but given the number of people around me who don’t get my jokes, it’s going to take a while. If people miss jokes, how can we teach machines to understand them?

Machines are the most voracious, tireless readers among us. So, while AI folks work on the understanding, it’s important for anyone writing content to consciously write for interpretation by machines as well as by humans.

So what makes how we read so different from how machines do it?

The big difference is context. Human interpretation is contextual and variable. What we pull out of a piece of text can change depending on what’s going on in the world, whether we have a vested interest in the topic at hand, or whether we’ve had our morning coffee. Give the same piece to a machine, and you’ll get the same result every time.

Humans are emotional, variable and unreliable. We’re imperfect interpreters. And we’re imperfect communicators. But we also have experience, context, bigger picture intuition and the ability to “repair” miscommunications in our favor. Machines don’t.

The logical leaps and informational synthesis that make us good at solving problems and participating in witty banter are skills that machines haven’t yet mastered. Let’s not put people on a pedestal, though: a number of studies report roughly 80 percent agreement between “well-trained” scorers as to whether a sentence is positive or negative. Think about that for a second: even trained readers disagree about the tone of about one sentence in five, so a good chunk of what you’re saying is being read as more positive or more negative than you meant. And that’s the best-case scenario.
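To make that agreement figure concrete, here is a minimal sketch of how simple percent agreement between two scorers can be computed. The labels are made up for illustration, and the function name `percent_agreement` is my own; the studies in question may well use more elaborate agreement measures.

```python
def percent_agreement(labels_a, labels_b):
    """Return the share of items on which two scorers gave the same label."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both scorers must label the same items")
    if not labels_a:
        raise ValueError("need at least one labeled item")
    matches = sum(a == b for a, b in zip(labels_a, labels_b))
    return matches / len(labels_a)


# Hypothetical labels for ten sentences (invented, not taken from any study).
scorer_1 = ["pos", "neg", "pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
scorer_2 = ["pos", "neg", "neg", "pos", "neg", "pos", "pos", "neg", "pos", "neg"]

agreement = percent_agreement(scorer_1, scorer_2)
print(f"{agreement:.0%} agreement")  # prints "80% agreement"
```

In this invented sample the two scorers split on two sentences out of ten, which is all an 80 percent agreement rate means.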

Machines might get that something’s a joke, but they won’t actually get the joke. That’s because humor is dynamic, contextual and domain-based. We’ve all experienced being the newcomer trying to laugh along with a group’s in-jokes.

The reason we don’t get them is mostly that we don’t understand the context around the jokes: the references, the situations, the reason cousin Tommy has the nickname he does. For a machine, that’s daily life. Dynamic context is an important area of artificial intelligence research: understanding the impact of who the speaker is, where they live, how old they are, the topic of conversation and to whom they are speaking. We all change our language depending on who we’re talking to and our relationship to them. I curse a lot around my peers, but you’d better believe that I’m much more careful when I’m teaching a class of kindergarteners or speaking about a topic that requires gravitas.

Humans also sense whether something is creatively unique or brilliant. There are pieces that resonate with us, that make us feel a particular way. We have a sense of the rhetorical, a sense of what’s powerful — and we can all channel it. At some point, every one of us has spoken or written a sentence that the world’s never heard before.

One of the unfortunate truths of machine learning is that machines can only compare what they’re reading with what’s come before. So, if you give them something truly new or different, they won’t know what to do with it until they have the data to compare it against.

In sum, machines are emotionless, have issues with context switching, and tend to be very, very literal. But they’re superb at sentence diagramming. And they’ll hang on your every word.

So how do we write something that machines can read and actually understand?

We don’t have a Strunk and White for robots yet, but one’s going to be sorely needed. Here’s our start:

Keep it simple. Stick to the facts. Avoid extraneous information and flights of fancy.

Go easy on the humor. Friendly and personable are one thing. But sarcasm and jokes can obscure meaning and make interpretation difficult.

Pick the right medium. Matter-of-fact press releases and reviews are ripe for analysis and rich with opportunities to tune your language slightly so that it will be interpreted more positively or negatively. There is plenty of context in the article itself. Short-form social media, on the other hand, can be much tougher because there isn’t much context in the post itself. Having the context of attached articles or previous tweets, as well as other ethnographic knowledge, can help. As an aside, when you’re filling out one of those restaurant comment cards, be very clear and simple with your comment. It has a much better chance of being interpreted correctly.

Be clear and balanced. Active language can be easier to parse. But it’s easy to let emotion get the better of you — and differentiating one emotion from another isn’t as easy as it seems. If you are emotional about something, be very clear about your emotion. “I’m angry that my server didn’t peel my grapes correctly, so I’m never returning to this restaurant.” As opposed to “I wish they’d done my grapes better than they did, and I’m not sure whether I want to return there or not.”

Oddly enough, these tips aren’t so far off Kurt Vonnegut’s tips on writing. And given that he touched on writing for machines as far back as his debut novel “Player Piano,” perhaps he was on to something.

Scoring sentiment — how do the bots fare?

Let’s take a look at a text written two ways and see what a machine has to say about it:

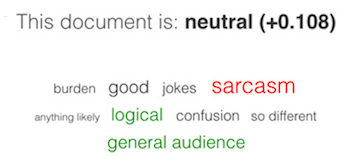

“Writing for machines is not so different from writing for a general audience — just follow the hallmarks of good writing. Be clear, concise and to the point. Keep your flow logical and your ideas consistent. Avoid jokes, sarcasm or anything likely to cause confusion. The aim is to communicate, after all. So don’t burden your audience with the need to translate.”

Our system pulls out the following document sentiment and important phrases.

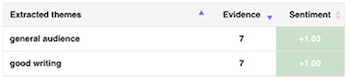

Our system also recognizes the following important themes, which summarize the important points in that piece of text:

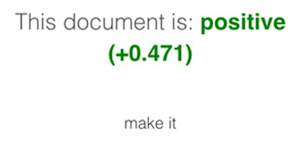

Now, let’s compare it to the same basic message, but written very differently:

“Wordsmithing for people vs wordsmithing for a brain of bits and bytes — what’s the difference? Less than we’d like. One audience is short on time, and the other doesn’t even have a concept of time. Find a point and make it — sharp. Say non to non sequiturs. And don’t take that tone with them — or mention that time about that thing. Because it’s your job to write, and theirs to skim read. So KISS.”

Note the lack of pickup of important phrases, and the seemingly overall positive tone.

Different text analytics systems will interpret these documents differently, and you may see a word or phrase you disagree with. That’s fine. This is purely for illustrative purposes: it shows that by choosing engaging phrasing carefully, with a nod towards machines, you’ll have the best chance of both engaging your human readers and getting the sort of machine analysis you want.
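For a feel of just how literal a machine reader can be, here is a toy sketch of a word-list sentiment scorer. It is not the system behind the screenshots in this piece, and real text analytics engines are far more sophisticated. The word list `LEXICON` and the function `literal_score` are my own inventions for illustration, and the sarcastic sentence is made up; the other two are the grape complaints from earlier.

```python
import re

# Tiny hand-made word list (an assumption for illustration, not a real lexicon).
LEXICON = {
    "great": 1, "good": 1, "love": 1, "clear": 1,
    "angry": -1, "never": -1, "terrible": -1, "confusing": -1,
}


def literal_score(text):
    """Sum the scores of known words; unknown words count as zero."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(LEXICON.get(word, 0) for word in words)


plain = ("I'm angry that my server didn't peel my grapes correctly, "
         "so I'm never returning to this restaurant.")
hedged = ("I wish they'd done my grapes better than they did, "
          "and I'm not sure whether I want to return there or not.")
sarcastic = "Oh great, my grapes arrived unpeeled again. Just great."

for label, text in [("plain", plain), ("hedged", hedged), ("sarcastic", sarcastic)]:
    print(label, literal_score(text))
# plain -2
# hedged 0
# sarcastic 2
```

The plainly angry complaint registers as negative, the hedged one doesn't register at all, and the sarcastic one comes out positive. A scorer this simple only sees the words on the page, which is the point of the advice above.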

Machines are the most voracious, tireless readers among us. So, while the AI folks work on the understanding, it’s important for anyone writing to consciously write for interpretation by machine as well as by humans.

***

Seth Redmore is CMO of text analytics leader Lexalytics.
