Many Americans who share misinformation on social media sites do so simply because they don’t conduct the necessary research to verify whether that content is accurate, according to a study by researchers from MIT, Canada’s University of Regina, the University of Exeter Business School in England and Mexico’s Center for Research and Teaching in Economics.

Through a series of studies dedicated to understanding why people share misinformation online, researchers determined that Americans might circulate so much fake news neither because they deliberately want to misinform others nor because they can’t separate accurate content from false content. Instead, there seems to be a disconnect between what Americans believe to be true and what they share with others: outside factors such as partisanship may outweigh the perceived accuracy of what they choose to post.

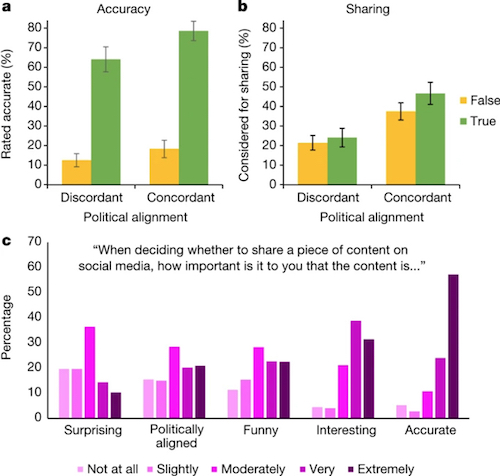

In one study, researchers recruited about 1,000 participants and presented them with a series of news story headlines. Half of the headlines were true and the other half were false. Asked whether or not they thought the headlines were accurate, survey participants overwhelmingly rated the true headlines as accurate more often than the false headlines.

A study published in Nature found that Americans want to share news content that’s true, but that we’re much less discerning about what we choose to share with others than we are at judging whether that content is accurate.

But when it came to whether participants would consider sharing these headlines with others on social media, researchers discovered those intentions to be much less discerning. Despite an overall desire to share only accurate content, partisan alignment was found to be a significantly stronger predictor of whether a story would be shared than a headline’s veracity. In other words, people appear more likely to share an item because it aligns with their political beliefs than because they believe the headline to be true.

Still, nearly 60 percent of survey participants said it’s “extremely important” that the content they share on social media is accurate, and 25 percent said it’s “very important.” So, what’s behind the disconnect between users’ accuracy judgments and what they share? The study found that the gap owes less to a desire to deliberately misinform others than to simple distraction, which may play a central role in social media users’ sharing intentions.

In another experiment, participants were asked to assess the accuracy of a headline before deciding whether or not they would share it. When a false headline was chosen to be shared on social media, the study found that more than half of participants who did so (51.2 percent) blamed a mere lack of attention. A third (33.1 percent) chalked up the mistake to simply not realizing the headline was inaccurate. Only 15.8 percent admitted that some personal preference—such as partisanship—was behind their decisions to deliberately share a false headline.

In other words, the study suggests that most people’s beliefs may not be as partisan as their social media feeds indicate, and that prompting social media users to instead think critically about the accuracy of what they post might improve the quality of the content they share.

One way to do so, the study’s authors suggest, would be for social media platforms to make several design-based changes, including what they refer to as “scalable attention-based interventions,” which may help curb the widespread problem of misinformation sharing online.

“Our results suggest that the current design of social media platforms—in which users scroll quickly through a mixture of serious news and emotionally engaging content, and receive instantaneous quantified social feedback on their sharing—may discourage people from reflecting on accuracy,” the study’s authors conclude. “But this need not be the case. Our treatment translates easily into interventions that social media platforms could use to increase users’ focus on accuracy. For example, platforms could periodically ask users to rate the accuracy of randomly selected headlines, thus reminding them about accuracy in a subtle way that should avoid reactance (and simultaneously generating useful crowd ratings that can help to identify misinformation). Such an approach could potentially increase the quality of news circulating online without relying on a centralized institution to certify truth and censor falsehood.”

The study, “Shifting attention to accuracy can reduce misinformation online,” was published in the science journal Nature. Respondents were sourced through Amazon’s crowd-sourcing service MTurk.

Have a comment? Send it to

Mar. 25, 2021, by Joe Honick

Did they really have to spend all that time and money to figure out that so many people spread stuff because it sounds just like their prejudices or whatever? The real obvious influence is simply the need to get in the stream of gossip. The idea of the masses doing "research" would probably surprise most marketing folks, who simply want to tease and tantalize much as political dirt dispensers do. As to concerns about reliability of news, I think you not long ago accurately indicated the lack of trust by even literate consumers of all media.